Augmented Reality for Parkinson Speech Therapy

Overview

In this project, we, a group of five electrical engineering students, aim to develop an Android app for use in speech therapy for patients with Parkinson's disease. In addition to the well-known tremor, many patients suffer from impaired speech: they tend to speak too loudly or not loudly enough and often begin slurring their words. We want to implement an app for AR glasses that supports speech therapy at home with accessible and instant feedback. A wearable device yields a predictable microphone response, as the distance between the user's mouth and the microphone is almost constant and nearly independent of the user's behaviour. This is displayed in the figure below. We want to use this advantage to process the audio signal during speech therapy exercises and display the recorded level so that patients receive feedback on whether their speech is loud enough. Further development following our project might include formant recognition and other ways to determine how the patients' speech can be improved, and to help them do so.

Implementation

|

|---|

| Nearly constant distance between the device's microphone and the patient's mouth. Source: https://www.ubimax.com, modified by project members. |

The process of programming the application and running it successfully on the AR-glasses (model: Vuzix M300XL) was split into several steps.

First of all, the team members had to learn the basics of Android-app development. We used the starting phase of the project to get familiar with Android Studio, the IDE we chose to develop the app. During this time, several of us also had to learn the basics of Java, as we had no experience with this programming language.

Once we had our first apps running and reading the microphone data of the glasses, Prof. Schmidt introduced us to the basic signal processing chain needed for our goal of a simple speech level analysis. He talked us through the theoretical basics of recursive filter design with the Levinson algorithm and showed us a MATLAB implementation of an A-weighting filter for pre-processing the microphone signal. He then explained the subsequent processing steps: noise power estimation followed by speech power estimation. The last step was a voice activity detection (VAD) based on the difference between the estimated speech and noise power.

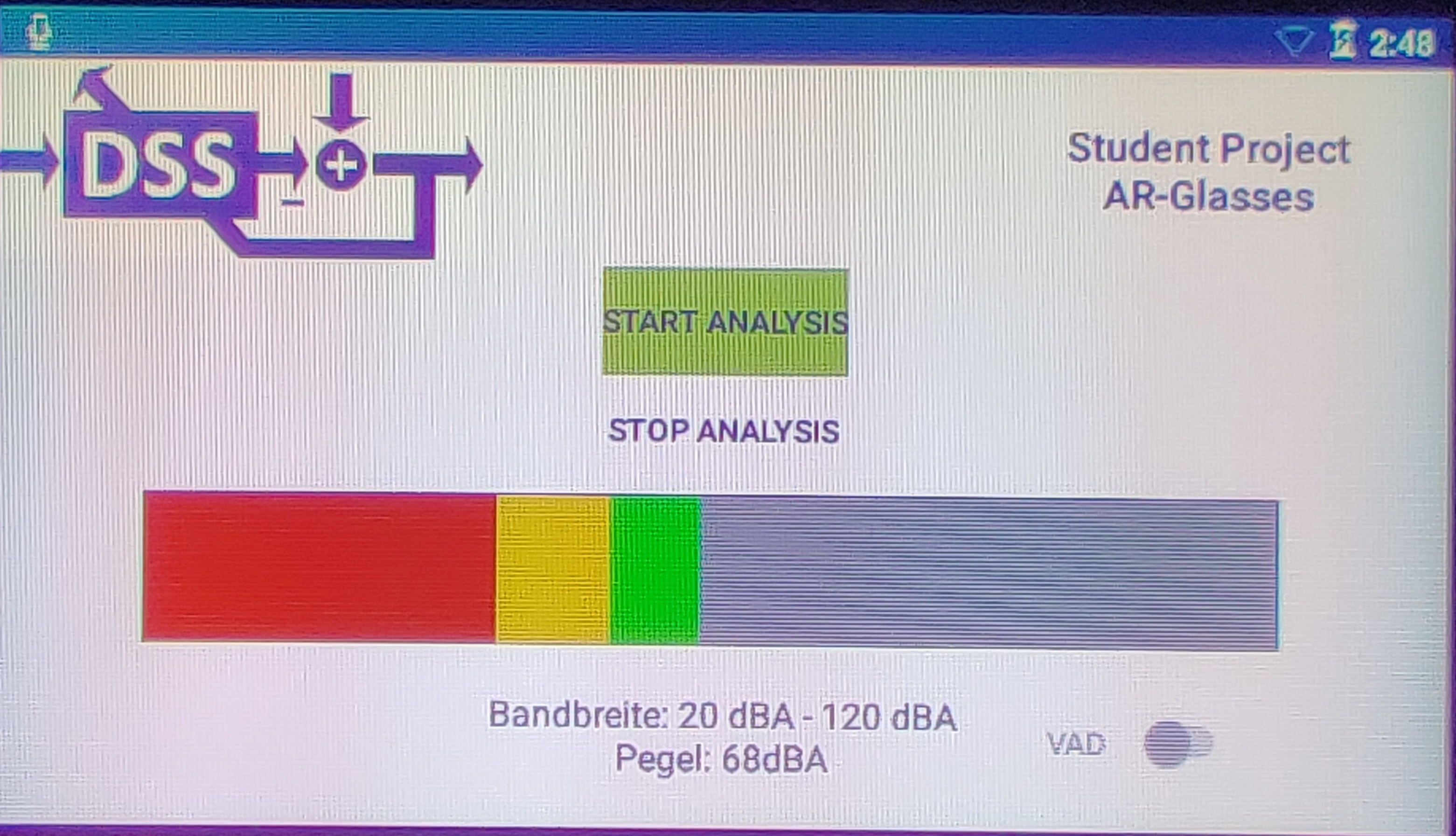
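The chain described above (frame power, noise power estimate, speech power estimate, VAD decision) can be sketched roughly as follows. This is only a minimal illustration and not the project's actual code: the class name `SpeechLevelSketch`, the smoothing constants and the 6 dB threshold are assumptions chosen for the example, and the input frame is assumed to be A-weighted already.

```java
/** Sketch of frame-wise noise/speech power tracking with a simple VAD. */
final class SpeechLevelSketch {
    // All constants are illustrative assumptions, not the project's values.
    private static final double NOISE_RISE = 1.01;       // slow upward drift of the noise floor per frame
    private static final double NOISE_FALL = 0.90;       // faster tracking when the frame power drops
    private static final double SPEECH_SMOOTH = 0.70;    // first-order smoothing of the speech power
    private static final double VAD_THRESHOLD_DB = 6.0;  // required margin of speech over noise
    private static final double EPS = 1e-12;             // avoids log10(0) and division by zero

    private double noisePower = EPS;
    private double speechPower = EPS;
    private boolean initialised = false;

    /** Mean power of one (already A-weighted) frame. */
    static double framePower(double[] frame) {
        double sum = 0.0;
        for (double x : frame) {
            sum += x * x;
        }
        return frame.length == 0 ? 0.0 : sum / frame.length;
    }

    /** Updates both estimates with one frame and returns true if speech is detected. */
    boolean process(double[] frame) {
        double p = Math.max(framePower(frame), EPS);
        if (!initialised) {
            noisePower = p;
            speechPower = p;
            initialised = true;
        }
        // Noise floor: follow decreases quickly, allow increases only slowly.
        if (p > noisePower) {
            noisePower = Math.min(noisePower * NOISE_RISE, p);
        } else {
            noisePower = NOISE_FALL * noisePower + (1.0 - NOISE_FALL) * p;
        }
        // Speech power: smoothed short-term power of the current signal.
        speechPower = SPEECH_SMOOTH * speechPower + (1.0 - SPEECH_SMOOTH) * p;
        // VAD: difference between the two estimates in dB.
        double differenceDb = 10.0 * Math.log10(speechPower / noisePower);
        return differenceDb > VAD_THRESHOLD_DB;
    }
}
```

One instance would be kept for the whole recording and `process` called once per microphone frame. The slow-rise, fast-fall noise tracker is just one common choice; the estimator actually used in the project may differ.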

Next, we started implementing the digital signal processing blocks in Java inside an Android application project. We quickly realised that implementing the filter design in Java would be very complicated and inefficient. Since we would not need to change the filter once it had been calculated satisfactorily, we opted to import the filter vector into our Java project as a “.csv” file saved from the MATLAB filter design. We implemented the rest of the signal processing chain as a class so that we could compute the speech level frame by frame from the microphone buffer in real time. This was realised using the “AsyncTask” extension for Java classes in Android development, which makes it possible to run the calculations and analysis in the background while also returning the results for each frame and updating the graphical user interface (GUI).

In the final version of our code, we use the following structure. A default activity (“MainActivity”) runs when the app is started and displays buttons to start and stop the analysis, as well as a switch to turn voice activity detection on and off. This activity also makes sure that the app has the device's permissions to access its microphone and to use its memory, and it reads the filter vector into a Java array. Once the analysis is started by pressing the corresponding button, another activity (“AnalysisTask”) is launched, which uses the “AsyncTask” extension to start the signal analysis. First, it calls a setup function in our third activity (“AnalysisOutputSpLev”), which initialises the needed variables and computes everything that can be done before the real-time level estimation starts (e.g. loading the filter vector into this class). After that, it writes the microphone input into a buffer and, once the buffer is full, passes it to the analysis activity for processing. The last activity we created is called “LEDBarView” and is responsible for displaying the results in a bar with red, yellow and green parts that symbolise whether or not the user is speaking loudly enough.
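To illustrate the idea of importing a precomputed filter vector and applying it frame by frame, here is a minimal sketch in plain Java. It is not the project's code. The class name `CsvFirFilterSketch` is hypothetical, and the sketch assumes that the exported vector can be applied as a non-recursive (FIR) filter, which the text does not state. The Android-specific parts (reading the file, `AsyncTask`, the microphone buffer) are left out so that the example runs anywhere.

```java
import java.util.Arrays;

/** Sketch: parse filter coefficients from CSV text and filter audio frame by frame. */
final class CsvFirFilterSketch {
    private final double[] coeffs;   // filter vector, e.g. exported from a design tool
    private final double[] history;  // last (coeffs.length - 1) input samples of earlier frames

    CsvFirFilterSketch(double[] coeffs) {
        this.coeffs = coeffs.clone();
        this.history = new double[Math.max(coeffs.length - 1, 0)];
    }

    /** Parses numbers separated by commas, semicolons or whitespace (the content of a .csv file). */
    static double[] parseCoefficients(String csvText) {
        return Arrays.stream(csvText.split("[,;\\s]+"))
                .filter(token -> !token.isEmpty())
                .mapToDouble(Double::parseDouble)
                .toArray();
    }

    /** Filters one frame; the stored history lets consecutive frames join without a gap. */
    double[] process(double[] frame) {
        int h = history.length;
        // Prepend the samples remembered from the previous frames.
        double[] extended = new double[h + frame.length];
        System.arraycopy(history, 0, extended, 0, h);
        System.arraycopy(frame, 0, extended, h, frame.length);

        double[] out = new double[frame.length];
        for (int n = 0; n < frame.length; n++) {
            double acc = 0.0;
            for (int k = 0; k < coeffs.length; k++) {
                acc += coeffs[k] * extended[h + n - k];
            }
            out[n] = acc;
        }
        // Remember the newest h input samples for the next call.
        System.arraycopy(extended, extended.length - h, history, 0, h);
        return out;
    }
}
```

Parsing the coefficients once at start-up and keeping one filter object alive for the whole analysis mirrors the split described above: everything that does not change is prepared before the real-time loop starts.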

|

|---|

| The graphical user interface (GUI) of our finished project's application. The coloured bar is now more prominent, next to the buttons to start and stop the analysis and the switch in the lower right-hand corner that enables voice activity detection. |

After getting the whole app running, we calibrated the speech level to corresponding dBA values. To do so, we saved the results of the analysis in an array, which is written to a .csv file once the analysis is stopped. With the help of a standardised sound level meter and a MATLAB script from our supervisor Finn, we determined an offset of about 120 dBA for our resulting values, which we then integrated into our GUI. Shortly after that, we presented our project to two speech therapists to hear their opinion on the possible use of our application and on the accessibility of the AR glasses. To our delight, the feedback was very positive. Apart from minor tweaks to the GUI, which we implemented afterwards as seen in the corresponding figure, both were happy with the possibilities that the app offers future patients. They thought that AR glasses are a good opportunity, and an even bigger one once the technology is better integrated into more common consumer products or even regular prescription glasses.
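As a rough illustration of how a calibration offset and a coloured level bar might fit together, consider the following sketch. It is not taken from the project. The class `LevelDisplaySketch`, the assumption that the offset is simply added to the uncalibrated level in dB, and the two colour thresholds are all hypothetical; only the offset of about 120 comes from the text above.

```java
/** Sketch: convert frame power to a calibrated level and choose a bar colour. */
final class LevelDisplaySketch {
    enum BarColour { RED, YELLOW, GREEN }

    // Offset of about 120 as reported in the text; assumed here to be additive.
    static final double CALIBRATION_OFFSET_DB = 120.0;
    // Hypothetical thresholds, chosen only for this example.
    static final double YELLOW_FROM_DBA = 55.0;
    static final double GREEN_FROM_DBA = 65.0;

    /** Power of the A-weighted signal (full scale = 1.0) to an approximate dBA value. */
    static double toCalibratedLevel(double power) {
        double uncalibratedDb = 10.0 * Math.log10(Math.max(power, 1e-12));
        return uncalibratedDb + CALIBRATION_OFFSET_DB;
    }

    /** Maps a calibrated level to the colour segment that should light up. */
    static BarColour colourFor(double levelDba) {
        if (levelDba >= GREEN_FROM_DBA) {
            return BarColour.GREEN;
        }
        if (levelDba >= YELLOW_FROM_DBA) {
            return BarColour.YELLOW;
        }
        return BarColour.RED;
    }
}
```

In practice the thresholds would be set together with the speech therapists, and a real display might also flag speech that is too loud.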

Results and Outlook

We are pleased that we were able to realise our app design and get a first working version of the speech level analysis running. Our project opens up prospects for further development. Building on the signal analysis in the app, the program could be extended with formant analysis and other functions that recognise slurring, to further assist patients in practising their speech therapy lessons. Furthermore, the use of AR glasses makes it possible to exploit the device's other sensors. For example, it might be possible to read out the gyro sensors to record the patients' tremors. We are glad about the huge learning opportunity this project gave us: working as a team on a larger-scale programming project via SVN and getting in touch with the practical future prospects of our studies.

Participating Students

- Moritz Boueke

- Jan-Niklas Busse

- Jakob Jacobsen

- Tim Johannisson

- Yannik Lundt

Supervisors

- Finn Spitz

- Gerhard Schmidt

